Perhaps, after all the work of building and calibrating your multicopter, you prepare your modified NIR camera, test the filters, stitch all the images together and get the final NDVI image through the PhotoMonitoring plugin, and then you feel a bit disappointed with the result. It was hard work, and the picture is beautiful, with nice colors, but you sense there is something more in it that you cannot quite pin down. At least that was my case, before I discovered the wonderful power of ImageJ. So I encourage you to go one step further and exploit that NDVI image a little more, so you can say more about it.

The features you need depend on what your photo is about, but you will probably want to count specific elements in it (the number of trees, for example), or distinguish one type of element from another (crop vs. weed) and get separate measurements for each type.

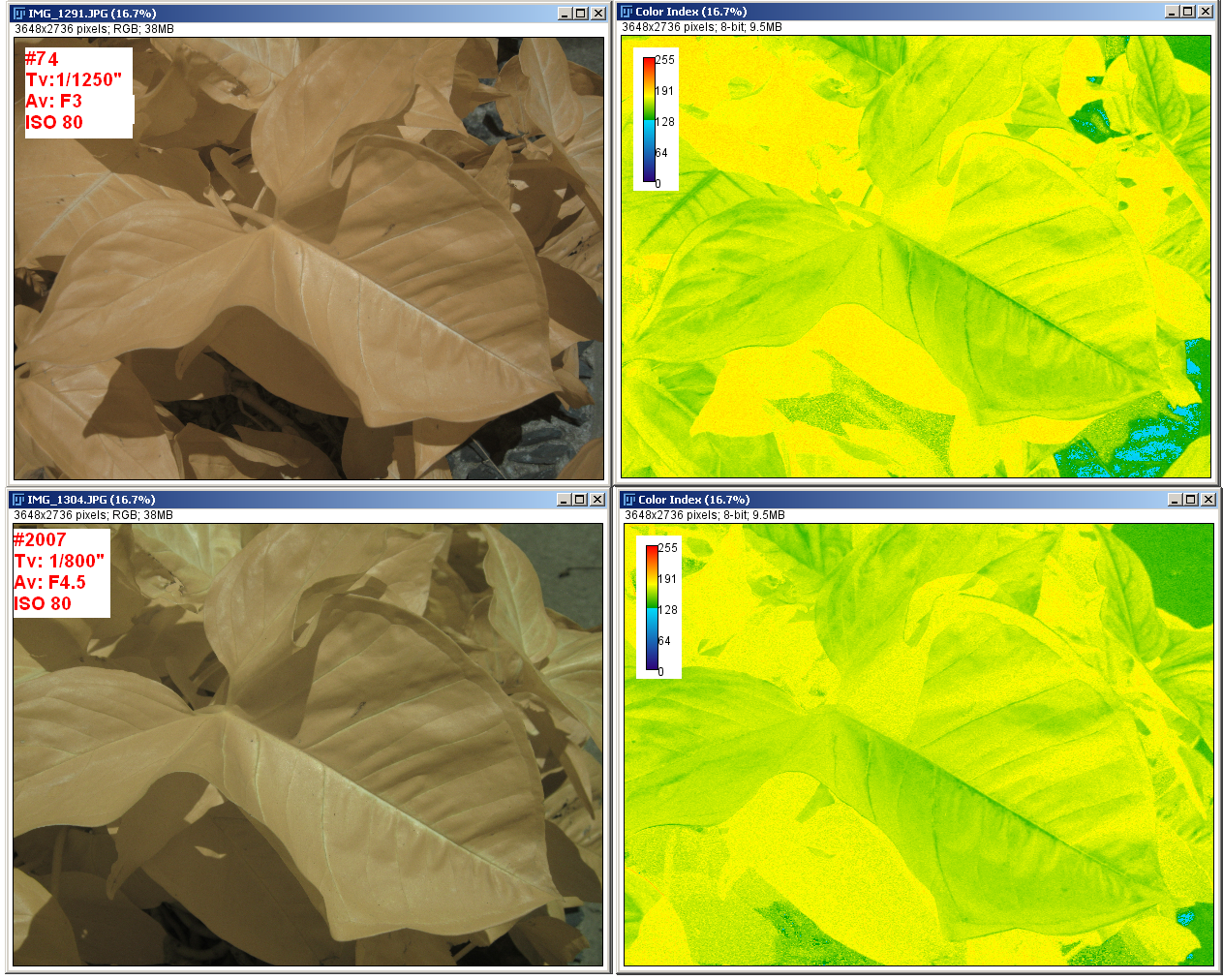

Here I present a simple example of some of these features for an olive grove photo. This is the original NIR photo and the plain NDVI image obtained directly with the PhotoMonitoring plugin of Fiji.

It is not easy to get much information from this NDVI image: the plugin maps NDVI values ranging from -1 to 1 onto 0-255, and then maps those values to colors using the LUT. Healthy plants have NDVI values between about 0.3 and 0.7, so we could restrict the NDVI values to that range and map only that range onto the 8-bit color image. That way, with the same color range (LUT), we could better distinguish the differences between the plants in the photo. That is the most I expect to do, as I cannot trust the absolute NDVI values from this camera.
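To illustrate what restricting the range does, here is a minimal Python/NumPy sketch, assuming the NDVI is already available as a float array. The function `ndvi_to_8bit` is hypothetical, and the linear rescale with clipping is my assumption of how such a mapping works, not the plugin's actual code.

```python
import numpy as np

def ndvi_to_8bit(ndvi, lo=-1.0, hi=1.0):
    """Linearly map NDVI values in [lo, hi] onto 0-255; values outside are clipped."""
    ndvi = np.asarray(ndvi, dtype=np.float64)
    scaled = (np.clip(ndvi, lo, hi) - lo) / (hi - lo)  # 0.0 at lo, 1.0 at hi
    return np.round(scaled * 255).astype(np.uint8)

ndvi = np.array([-1.0, 0.0, 0.3, 0.5, 0.7, 1.0])

# Full [-1, 1] range: plant values (0.3-0.7) only use a narrow band of levels.
print(ndvi_to_8bit(ndvi))
# Restricted [0.3, 0.7] range: the same plant values now span all of 0-255.
print(ndvi_to_8bit(ndvi, lo=0.3, hi=0.7))
```

With the restricted range, everything below 0.3 collapses to 0 and everything above 0.7 to 255, so the LUT's colors are spent only on the vegetation values.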

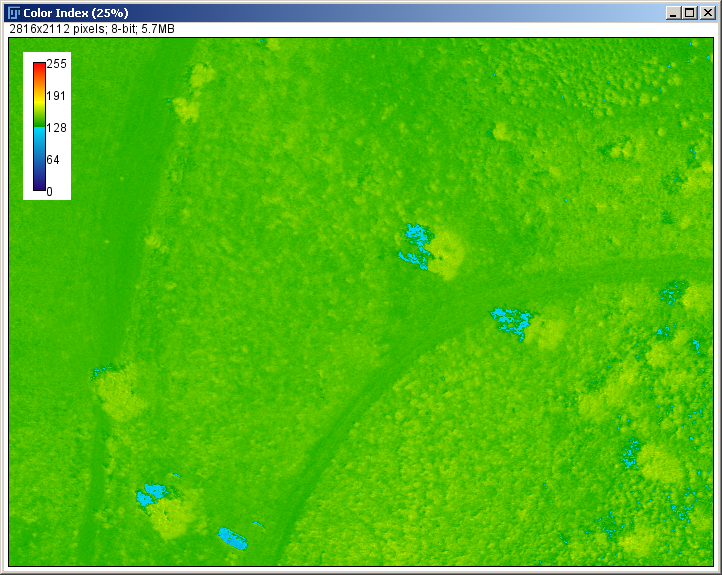

The floating-point image containing the NDVI information is a 32-bit grayscale image, which we can easily threshold in Fiji to separate photosynthetic elements from the rest. The threshold feature classifies grayscale images (there is also a color threshold): values inside the threshold range fall into one group and values outside it into another, so you can obtain statistics and use other features based on this classification.

*32-bit grayscale NDVI image, values in [-1, 1]*
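To make the two-group idea concrete, here is a minimal NumPy sketch of what a threshold classification amounts to, assuming the 32-bit NDVI image has been loaded into a float array. The helpers `threshold_groups` and `describe` are hypothetical names for illustration; this is not how Fiji implements thresholding internally.

```python
import numpy as np

def threshold_groups(ndvi, lower, upper):
    """Split NDVI pixels into those inside [lower, upper] and those outside it."""
    ndvi = np.asarray(ndvi, dtype=np.float64)
    inside = (ndvi >= lower) & (ndvi <= upper)  # boolean mask: True = "in range" group
    return ndvi[inside], ndvi[~inside]

def describe(values):
    """Basic statistics for one group of pixels (None for an empty group)."""
    if values.size == 0:
        return None
    return {"count": int(values.size), "mean": float(values.mean()),
            "min": float(values.min()), "max": float(values.max())}

# Tiny synthetic 2 x 3 NDVI "image", purely illustrative.
ndvi = np.array([[-0.2, 0.05, 0.35],
                 [0.45, 0.10, -0.6]])
plants, rest = threshold_groups(ndvi, 0.1, 1.0)
print("in range:", describe(plants))
print("outside:", describe(rest))
```

Once every pixel belongs to one group or the other, any statistic can be computed per group, which is the same idea behind measuring only the thresholded pixels in Fiji.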

When applying the threshold (Image->Adjust->Threshold), you will see the histogram of the image and two sliders for the lower and upper limits of the threshold (remember that these are NDVI values as calculated by the plugin). I select just the zone of interest, so I am sure that the values I use for the threshold correspond to the plants I want to study. Several algorithms are available, but for now the default one is good. Check "Dark background" if the elements of interest are brighter than the background, as in this case, and choose the output colors for the thresholded image (B&W in this case).

*Threshold window*
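Looking at the histogram of only the zone of interest can be sketched outside Fiji as below, assuming a rectangular selection given as pixel offsets. `roi_histogram` and its parameters are hypothetical, the 20 bins over [-1, 1] are an arbitrary choice, and the random array merely stands in for a real NDVI image.

```python
import numpy as np

def roi_histogram(ndvi, top, left, height, width, bins=20):
    """Histogram of NDVI values inside a rectangular region of interest."""
    roi = np.asarray(ndvi, dtype=np.float64)[top:top + height, left:left + width]
    values = roi[np.isfinite(roi)]  # drop NaN/inf pixels (e.g. from division by zero)
    counts, edges = np.histogram(values, bins=bins, range=(-1.0, 1.0))
    return counts, edges

rng = np.random.default_rng(0)
ndvi = rng.uniform(-0.3, 0.5, size=(100, 100))  # synthetic stand-in image
counts, edges = roi_histogram(ndvi, top=20, left=30, height=40, width=50)
for c, lo, hi in zip(counts, edges[:-1], edges[1:]):
    if c:
        print(f"[{lo:+.1f}, {hi:+.1f}): {c}")
```

Restricting the histogram to the selection keeps background pixels from the rest of the photo from distorting the picture of where the plant values lie.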

I think it is good to inspect the image with the mouse to get an idea of the values you are working with. In this image I saw that the trees never had values below 0.1, which gave me an idea of the lower limit for the threshold. With that in mind, you can move the lower-limit slider until only the trees and plants are visible; I leave the upper limit at 1.

*NDVI image thresholded to [0.1, 1]*
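The B&W result is, in effect, a mask in which pixels inside the chosen range are white and everything else is black. Here is a minimal NumPy sketch, assuming the [0.1, 1] range chosen above; `threshold_to_bw` is a hypothetical helper, and white-on-black is the output convention I assume for the B&W colors.

```python
import numpy as np

def threshold_to_bw(ndvi, lower=0.1, upper=1.0):
    """8-bit black-and-white image: 255 where lower <= NDVI <= upper, else 0."""
    ndvi = np.asarray(ndvi, dtype=np.float64)
    mask = (ndvi >= lower) & (ndvi <= upper)  # NaN compares False, so it ends up black
    return np.where(mask, 255, 0).astype(np.uint8)

ndvi = np.array([[0.05, 0.12, 0.40],
                 [-0.3, np.nan, 0.95]])  # illustrative values only
bw = threshold_to_bw(ndvi)
print(bw)
print("fraction of pixels kept:", (bw == 255).mean())
```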

Once the image is thresholded, you will see a window with the results, which contains the information you are looking for: the lower and upper limits of the NDVI values in the plants, [0.106, 0.460]. I will use that range in the plugin to get a more easily interpretable color range in my result image:

*PhotoMonitoring plugin values*

I don't stretch the bands, as all the information I have is meaningful and I don't want to discard any pixel.
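The range-finding and remapping steps can be sketched in NumPy as below, assuming the [0.1, 1] threshold used above. Both helpers are hypothetical; in the workflow described here the plugin does the remapping once you enter the lower and upper values, so this only shows the arithmetic I assume is involved, on made-up sample values.

```python
import numpy as np

def plant_ndvi_range(ndvi, lower=0.1, upper=1.0):
    """Min and max NDVI among pixels that pass the threshold (NaNs never pass)."""
    ndvi = np.asarray(ndvi, dtype=np.float64)
    plants = ndvi[(ndvi >= lower) & (ndvi <= upper)]
    if plants.size == 0:
        raise ValueError("no pixels inside the threshold range")
    return float(plants.min()), float(plants.max())

def rescale_to_8bit(ndvi, lo, hi):
    """Stretch [lo, hi] over 0-255 so the LUT colors cover only the plant values."""
    scaled = (np.clip(np.asarray(ndvi, dtype=np.float64), lo, hi) - lo) / (hi - lo)
    return np.round(scaled * 255).astype(np.uint8)

ndvi = np.array([[0.02, 0.106, 0.30],
                 [0.46, -0.2, 0.25]])  # illustrative values only
lo, hi = plant_ndvi_range(ndvi)
print(lo, hi)
print(rescale_to_8bit(ndvi, lo, hi))
```

Using the measured plant range rather than a fixed [0.3, 0.7] adapts the color scale to whatever this particular camera actually produces, which matters when its absolute NDVI values cannot be trusted.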

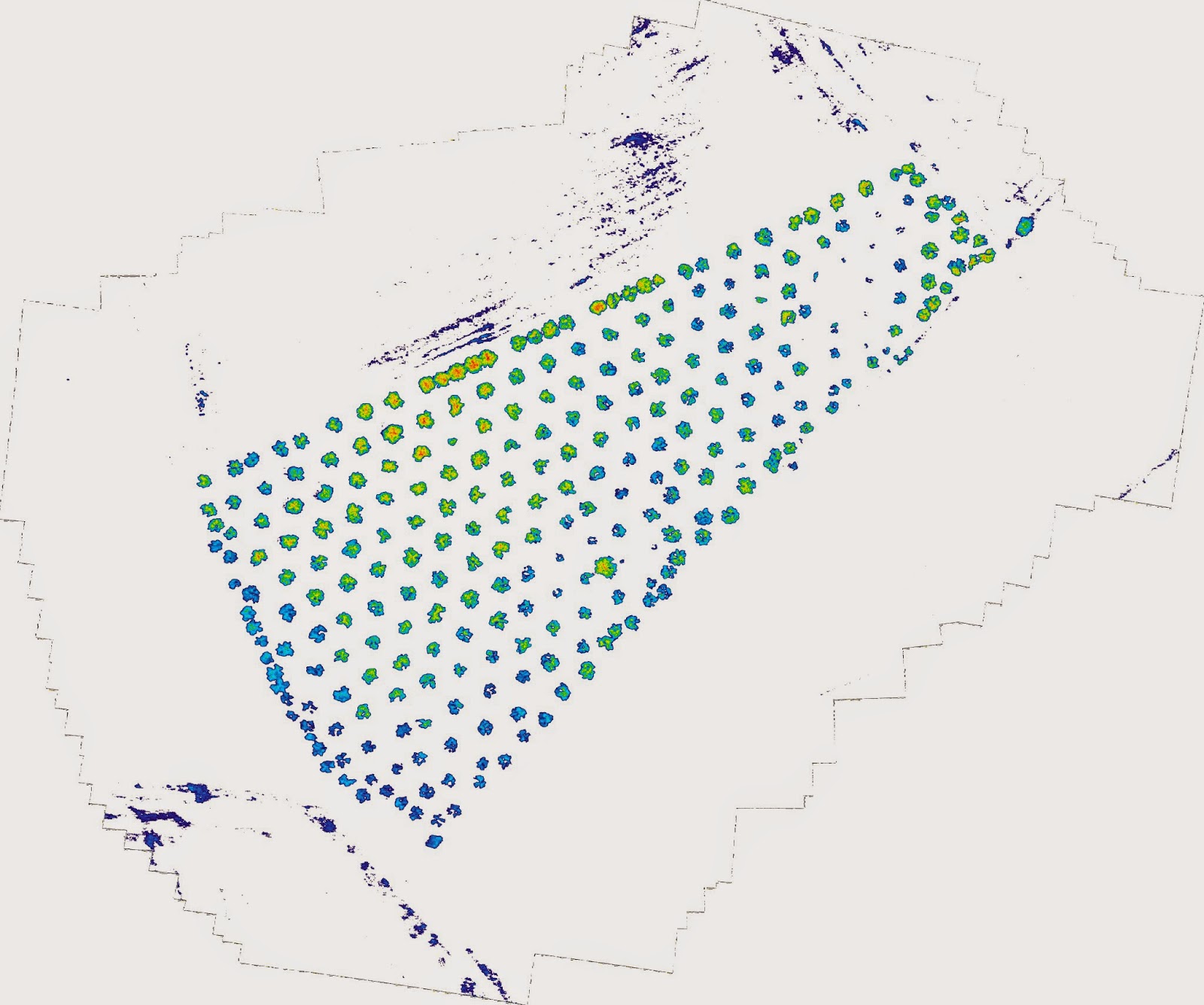

This is the new result, in which it is easier to see the zones of the grove where the NDVI values are higher than in others (more yellow and red colors).